Machines Maketh Us.

The list of the most outrageous/exciting/frontier-pushing/fantastical/I-am-running-out-of-adjectives kind of scientific discoveries still waiting to happen has just got a little smaller. A feat accomplished by a multi-disciplinary group of neurosurgeons, computer scientists, and electrophysiologists has struck ‘bionic man’ off the list of impossible dreams.

In the latest issue of Nature, John Donoghue, a neuroscientist at Brown University and a pioneer in this field, and his colleagues report that a paralyzed man has successfully controlled a computer cursor with just his ‘thoughts’.

Matt Nagle, who was paralyzed from the neck down after a knifing incident, has a microchip implanted in his brain and a wire coming out of his head that connects him to a computer. He is able to move the cursor with just his intention to do so. He can send emails, play basic games, control his TV and draw a circle with modified painting software, and he learned to do all this with minimal training.

Technically known as NMPs (Neuromotor Prostheses), these interfaces use signals from the brain to drive prosthetic devices. They fall under the larger category of BCIs (Brain Computer Interfaces), which connect any part of the brain to machines. NMPs use signals from the motor cortex, the specific area of the brain that controls the voluntary movements of our body.

How does this work?

(The picture and its legend are given below.)

It starts with implanting a chip in the area of the brain that controls movement and recording its activity while he is asked to ‘move the cursor’ on the screen in front of him. The cursor is actually being moved by someone else; he traces the movement mentally, that is, his brain sends out the information the muscles would need to make that particular movement of the cursor. Although he can’t move any of his muscles, there is still activity in the motor cortex that carries information about the movement, such as its direction. This pattern of activity is then fed to an algorithm that sorts the signals into intentions, for example by assigning the pattern of activity for ‘move left’ to the instruction for the cursor to ‘move left’. Another algorithm then has to detect these patterns and change them into coordinates for the position of the cursor on the screen.
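
To make those two steps concrete, here is a toy sketch in Python. It is not the decoder used in the Nature paper: the firing rates are made up, the nearest-average-pattern rule is just the simplest thing that fits the description, and every name in it (`calibrate`, `decode_intention`, `move_cursor`, `STEPS`) is hypothetical.

```python
import math

# Hypothetical unit steps for each intended direction
# (screen coordinates, with y growing downward).
STEPS = {"left": (-1, 0), "right": (1, 0), "up": (0, -1), "down": (0, 1)}


def calibrate(trials):
    """Average the firing-rate pattern recorded for each cued direction.

    trials: list of (direction, firing_rates) pairs collected while the
    user mentally follows a cursor that someone else is moving.
    """
    sums, counts = {}, {}
    for direction, rates in trials:
        acc = sums.setdefault(direction, [0.0] * len(rates))
        for i, r in enumerate(rates):
            acc[i] += r
        counts[direction] = counts.get(direction, 0) + 1
    return {d: [s / counts[d] for s in acc] for d, acc in sums.items()}


def decode_intention(templates, rates):
    """Step 1: pick the direction whose average pattern is closest."""
    return min(templates, key=lambda d: math.dist(templates[d], rates))


def move_cursor(position, direction, speed=10):
    """Step 2: turn the decoded intention into new screen coordinates."""
    dx, dy = STEPS[direction]
    return (position[0] + dx * speed, position[1] + dy * speed)


# Made-up firing rates (spikes per second) for three recorded neurons.
calibration_trials = [
    ("left", [30, 5, 10]), ("left", [28, 7, 12]),
    ("right", [5, 32, 11]), ("right", [6, 29, 9]),
    ("up", [10, 10, 35]), ("up", [12, 9, 33]),
    ("down", [15, 15, 2]), ("down", [17, 14, 3]),
]
templates = calibrate(calibration_trials)

# Three new activity patterns: two that look like 'left', one like 'up'.
cursor = (100, 100)
for rates in ([29, 6, 11], [29, 6, 11], [11, 10, 34]):
    cursor = move_cursor(cursor, decode_intention(templates, rates))
```

A real system has to cope with noisy signals from many more neurons and with continuous movement rather than four fixed directions, so treat this only as a picture of the record, classify, move loop.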

This work is the culmination of earlier animal studies by many groups. An exhaustive list would look like a review for IEEE, so I will just refer to a couple of experiments by Nicolelis and his group at Duke University, who are well known in this field.

In a paper published in PLOS in 2004, Nicolelis's group trained monkeys to move a cursor to a target on a computer screen using a joystick. The monkeys were also trained to grip the joystick with varying force according to the size of a cursor on the screen, so they had to vary both force and velocity to do the task. The researchers recorded from various parts of the motor, premotor (required for planning movement) and somatosensory (where the perception of touch arises; this is needed because touch feedback is essential for movement) areas while the monkeys did the task. They used an Artificial Neural Network to extract information about hand grip force and the velocity of the hand trajectory from the brain recordings, and then moved the cursor with these signals alone. The monkeys soon realized that they didn’t have to move their hands to move the cursor; all they had to do was think of doing it, and they stopped using their hands. The brain signals were also used to drive a robotic arm, using parameters derived from the recorded activity alone. This study showed that it might be possible to use brain signals from paralyzed patients to drive bionic arms.
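
For a feel of what ‘extracting velocity from the recording’ means, here is a toy sketch in Python. It is a deliberately simpler stand-in for the Artificial Neural Network mentioned above: a plain weighted sum of firing rates, fitted by gradient descent. The data are made up and the names (`predict`, `fit`, `training`) are hypothetical.

```python
def predict(weights, rates):
    """Weighted sum of firing rates: the decoded hand velocity."""
    return sum(w * r for w, r in zip(weights, rates))


def fit(samples, learning_rate=0.5, epochs=2000):
    """Fit the weights by batch gradient descent on the mean squared error."""
    n_neurons = len(samples[0][0])
    weights = [0.0] * n_neurons
    for _ in range(epochs):
        grads = [0.0] * n_neurons
        for rates, velocity in samples:
            error = predict(weights, rates) - velocity
            for i, r in enumerate(rates):
                grads[i] += 2 * error * r / len(samples)
        weights = [w - learning_rate * g for w, g in zip(weights, grads)]
    return weights


# Made-up training data: normalised firing rates of two neurons, paired with
# the hand velocity measured while the joystick was still in use.
# The velocities were generated as 2.0 * rate_a - 1.0 * rate_b.
training = [
    ((0.1, 0.9), -0.7), ((0.5, 0.5), 0.5), ((0.9, 0.1), 1.7),
    ((0.3, 0.2), 0.4), ((0.8, 0.7), 0.9), ((0.2, 0.6), -0.2),
]
weights = fit(training)

# Once fitted, new brain activity alone yields a velocity for the cursor.
decoded_velocity = predict(weights, (0.6, 0.4))
```

The point is only the workflow: fit the mapping while the hand is still moving, then drive the cursor (or a robotic arm) from the recorded activity alone.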

In earlier work published in Nature Neuroscience, the same group trained rats to press a lever with their paws to obtain a water reward from a spout (the authors call it a robotic arm). Neuronal activity patterns from the motor cortex (and from areas in the thalamus) were recorded at the same time. These activity patterns were put through mathematical transformations to convert them into signals to control the spout. The rats then had a choice: press the lever, or just ‘think’ of pressing it and let the program convert those signals into spout movement to give them water. After repeated trials the rats reduced or stopped pressing the lever. They got water by just ‘thought’. (Lazy rats.)

Although this is exciting, the situation is akin to the prototype Hondas one sees at motor expos: there is a long way to go before this is routinely implemented in patients. A handful of groups around the world (and we are one) are working on developing BCIs for the paralyzed. What separates them is the area of the nervous system they choose to record neuronal activity from and the kind of algorithm they use to extract signals that can be used for control.

But whatever the state of things now, there are exciting times ahead.

------------------------------------------------------------------------------------------------

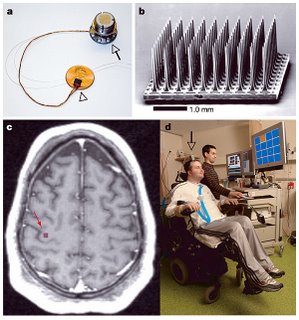

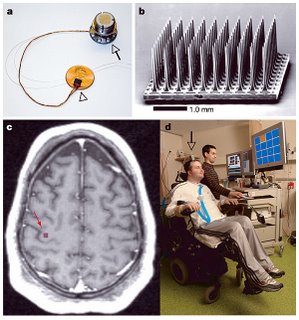

a- the chip on a penny and the connector that will be fixed to the skull

b- the chip under an electron microscope

c- MRI of Matt's brain; the red square shows the area where the chip is implanted

d- Matt connected to the setup

Donoghue has started a company; this is their URL: http://www.cyberkineticsinc.com/

The experiment videos are available on the website below, as are the original paper (for free) and some news items about it.

http://www.nature.com/nature/focus/brain/experiments/videopage1.html

2 Comments:

aah... a convenient advert for the work in our lab. :)

PB

http://idiosyncrasy.reiffblogs.com

That was a subtle ad:-)

No neons, no models.
